Who Was / Is Philip Agre?

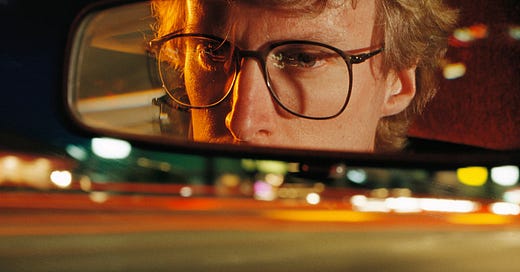

Thirty years ago, the pioneering computer scientist and humanities professor predicted a world in thrall to AI. Now that it's arrived, he's nowhere to be found.

I haven’t been dipping into the news lately because, well…the news.

But I’m glad I stumbled upon Reed Albergotti’s Washington Post profile of former UCLA professor Philip Agre. Thirty years ago, Agre predicted computers—specifically AI—would upend nearly everything we understood about privacy, the manipulation of behavior and belief, and the blurry line between information and misinformation.

I’m not qualified to discuss Agre’s work in any meaningful depth. But what grabbed me was that, although he was a mathematician and an expert in how computers “think,” Agre wanted to understand AI on a philosophical level. Frustrated by the lack of humanism in his technical training, he set out to learn to read philosophy on its own terms, not his. It transformed him into something essential and vanishingly rare: a person who understood how AI worked on a nuts-and-bolts level but could grasp it on a human one too. And what he saw frightened him.

Agre saw that AI wasn’t neutral, and its development and deployment could have profound ethical and political consequences.

I don’t know whether Agre would have labeled himself a “cyberpunk,” but he predicted that AI research, particularly in fields like machine learning and robotics, would inevitably favor the interests of powerful institutions such as corporations and governments. This technology is already being used for purposes we half-understand, like swaying elections, and it is having effects we don’t understand at all, like how the virtual world shapes the brain development of those born since its rise.

At first, Agre’s work didn’t have much effect outside academic circles. Now, people are wondering why it didn’t. Complicating matters, Agre himself left the conversation. Nearly twenty years ago he dropped out, hard. Friends reported him missing. When former colleagues attempted to compile his writing, Agre waved them off.

In The Fall of Troy, King Eurypylus opens a trunk left by the Trojan priestess Cassandra. Glimpsing an image of the god Dionysus inside, he goes mad.

What did Philip Agre see? I don’t know more than anyone else about his story, but for reasons I can’t quite pin down, I find something deeply touching and poignant about it. Others seem to agree; since I first published this piece in 2021, a handful of people have commented or sent me notes. Some are straight-up conspiracy theorists; others are former students who admired him. But if Agre himself is still out there, he’s keeping his thoughts to himself.

Wherever he is, I hope Philip Agre has found peace. Seeing into the future is a heavy burden indeed.
